The cognitive psychologist reimagining STEM learning

Nia Nixon is bringing inclusion, belonging, and AI collaboration to STEM

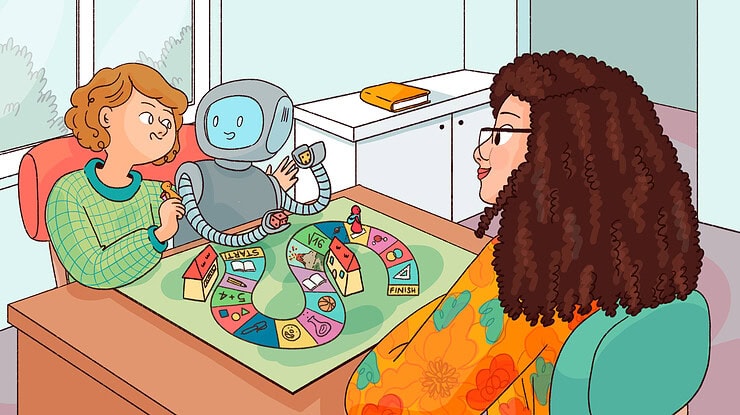

Nia Nixon studies how human-AI teams can foster more inclusive, supportive group learning environments in STEM disciplines. Annie Brookman-Byrne finds out more.

Annie Brookman-Byrne: What questions are you asking in your research on STEM learning?

Nia Nixon: What happens when students collaborate with AI – not just as a tool, but as a teammate? This deceptively simple question is at the heart of my work. In my lab, we explore how AI can be a partner that listens, nudges, and helps shape equitable collaboration.

This research is urgently needed because innovation increasingly happens through collaboration in science and technology. Yet many students don’t experience collaboration as a context where they feel heard, valued, or empowered, especially girls and individuals from underrepresented racial groups. By redesigning AI’s role in these settings, we have a chance to shift the dynamics and make team-based learning more inclusive from the ground up.

ABB: How has the field changed?

NN: The field has evolved from viewing AI solely as a tutor or tool to imagining it as a teammate that listens, adapts, and supports. Ideally, AI should be able to take on social roles, adjust to human needs, and build trust over time. These qualities are critical in collaborative learning.

My own work builds on these ideas by exploring how AI teammates shape interpersonal dynamics, particularly around inclusion and identity. AI can either amplify existing inequalities or help us reimagine collaborative learning spaces, depending on how it’s designed. Generative AI can expose power dynamics in team interactions and support more equitable engagement, especially for girls and underrepresented students in STEM fields.

My PhD student Mohammed Amin Samadi developed a platform that enables researchers to explore how different AI teammate behaviors and personas shape group collaboration. This marks a pivotal shift in how we conceptualize and study AI’s role in fostering inclusion, belonging, and equitable participation in team-based learning. The field of AI learning is moving beyond automation toward building AI systems that act as cognitive and emotional partners.

ABB: How will your research help children?

NN: Collaboration, adaptability, and AI fluency are essential skills in our world. But for many children, especially those from marginalized communities, group work can be alienating or discouraging. Our research aims to change that. We use AI to detect subtle dynamics in a learning group, for example, identifying who’s being ignored, who’s contributing ideas, and how emotional tones are shifting over time. These insights power our AI teammate interventions, which deliver real-time, equity-centered nudges that support inclusion, regulation, and belonging.

Importantly, our work also reveals the risks of bias in AI. A recent study by my PhD student Jaeyoon Choi showed gender bias in how ChatGPT assigned leadership roles – male students were assigned more dominant, assertive roles, while female students were assigned collaborative, supportive roles. We need intentional, bias-aware design in collaborative AI systems.

Through our platform, we will test AI teammates in labs in the US and Germany to understand not only what works, but what’s fair, culturally responsive, and truly empowering for all children. By shaping more equitable collaboration today, we’re giving kids the tools and confidence they’ll need to thrive in tomorrow’s AI-rich world.

ABB: What are the biggest mysteries in your field?

NN: We’re still figuring out what it means for AI to be a good teammate. Can it recognize subtle interpersonal cues? Can it build trust across differences? What happens when children disagree with AI – or ignore it? There’s also the ethical puzzle: How do we ensure that AI teammates don’t unintentionally reinforce bias or promote surveillance-like dynamics? My work aims to tackle these questions by combining cognitive science with social psychology and learning sciences to understand how communication, identity, and power interact in human-AI teams.

ABB: What are your hopes for the future of AI learning?

NN: I hope we will succeed in designing AI that’s less about control and more about care. Systems that listen more than they talk. AI that supports – rather than replaces – human educators and peers. I also hope we will stop building “universal” models and start designing for diversity from the ground up. Inclusion has to be the foundation, not an afterthought. Ultimately, I’d love to see AI teammates become part of a larger movement to reimagine classrooms as places where every student, regardless of background, feels seen, valued, and empowered to help shape the future of science and technology.

Footnotes

Nia Nixon is an Associate Professor at the University of California, Irvine, where she directs the Language and Learning Analytics Lab (LaLA Lab). Her research bridges cognitive science, education, and AI to explore how human-AI collaboration can promote more inclusive learning environments. As a 2025–2027 Jacobs Foundation Research Fellow, she is especially focused on how AI teammates can support underrepresented students in STEM disciplines. Nia’s work has been featured in leading journals and international collaborations aimed at shaping the future of equitable education.

This interview was edited for clarity.